Whisper is an advanced automatic speech recognition (ASR) system developed by OpenAI that transcribes audio into text with high accuracy.

Evaluating OpenAI’s Whisper

Evaluating OpenAI’s Whisper ASR: Performance Analysis Across Diverse Accents and Speaker Traits. Whisper was proposed in the paper Robust Speech Recognition via Large-Scale Weak Supervision. Trained on over 12.5 million hours of multilingual audio data, Universal-1 achieves best-in-class speech-to-text accuracy across four major languages: English, Spanish, French, and German. Word error rate (WER) is a metric used to evaluate the accuracy of automatic speech recognition systems. Voicebot = Conversation Design + NLU Design + ASR Design. We discovered an added bonus: auto-translation to English text. OpenAI Whisper vs Google Speech-to-Text vs Amazon Transcribe: The ASR rundown. We propose a speech-based in-context learning (SICL) approach that leverages several in-context examples (paired spoken words and labels).

OpenAI’s Whisper Model Crushes Google in AI Head-to-Head

In particular, Whisper excelled in accuracy for videos featuring rapid speech and English accents, so we rolled it out to 100% of our customers. The best part? Our Deepgram Whisper Large model (OpenAI’s large-v2) starts at only $0.0048/minute, making it ~20% more affordable than OpenAI’s offering. In OpenAI’s own words, Whisper is designed for AI researchers studying robustness, generalization, capabilities, biases and constraints of the current model.

In such scenarios, Whisper demonstrates accuracy levels approaching or matching those of human transcribers.

As a release designed for developers and researchers pursuing innovations in speech analysis, OpenAI’s Whisper v3 offers considerable power and versatility. We are excited to introduce Universal-1, our latest and most powerful speech recognition model. This use case stands in contrast to Deepgram’s speech-to-text API. Deepgram Whisper Large is 3x faster.

This tutorial gave you a step-by-step guide for using Whisper in Python to transcribe earnings calls and even provided insight on summarization and sentiment analysis using GPT-3 models.

Whisper is a pre-trained model for automatic speech recognition (ASR) published in September 2022 by Alec Radford et al. The model is open-source and has several weight sizes available to the public. This study investigates Whisper’s automatic speech recognition (ASR) system performance across diverse native and non-native English accents. The codebase for Whisper ASR is compatible with Python 3.11 and recent PyTorch versions. It relies on a few Python packages, including OpenAI’s tiktoken for its fast tokenizer implementation. OpenAI Whisper is an open-source speech-to-text tool built using end-to-end deep learning. With a Transformer trained on 680,000 hours of weakly supervised, multilingual audio data, OpenAI’s Whisper can reach human-level robustness and accuracy in ASR without the need for fine-tuning or any intermediaries. The word error rate is a widely used metric to measure the accuracy of ASR systems.

A lower WER indicates higher accuracy. The recent open sourcing of OpenAI Whisper, which approaches human-level robustness and accuracy on English speech recognition, has shed new light on the WER measurement. December 22, 2023 by Jordan Brown. This research explores the performance of Whisper’s ASR system on different native and non-native English accents.
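To make the WER metric concrete, here is a minimal sketch in Python. It assumes the common definition WER = (substitutions + deletions + insertions) / number of reference words, computed as a word-level edit distance. The function name `wer` and the simple lowercase, whitespace-split normalization are illustrative assumptions; published benchmarks usually apply more careful text normalization before scoring.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance divided by reference length.

    Assumption: text is normalized only by lowercasing and splitting on
    whitespace. Real evaluations typically normalize punctuation, numbers, etc.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the reference words seen so far
    # (initially none) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # i deletions turn i reference words into an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion (reference word missing)
                curr[j - 1] + 1,     # insertion (extra hypothesis word)
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One substitution (sat -> sit) and one deletion (the) over 6 reference words.
    print(wer("the cat sat on the mat", "the cat sit on mat"))
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.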

Automatic Speech Recognition is Made Easy with OpenAI’s Whisper

Further detail is presented on the methodology page. Word error rate: % of words transcribed incorrectly (June ’24). Price: USD per 1000 minutes. Presented at ICASSP 2023. However, remember, as we’ve stated before: while WER is a good, first-blush metric for comparing the accuracy of speech recognition APIs, it is by no ... It is trained on a large dataset of diverse audio and is also a multitasking model that can perform multilingual speech recognition, speech translation, and language identification. How can I get word-level timestamps when transcribing with OpenAI’s Whisper (tested on Ubuntu 20.04 x64 LTS with an Nvidia GeForce RTX 3090)? Unlike many of its predecessors, such as Wav2Vec 2.0, which are pre-trained on unlabelled audio data, Whisper is pre-trained on a vast quantity of labelled audio-transcription data: 680,000 hours. Generally, you’ve got two options: build a solution in-house using an open-source model, like OpenAI Whisper ASR, or pick a specialized speech-to-text API provider. Once Whisper is installed, you can run it from the command line to transcribe speech into text. Pass the path of your audio file to the `whisper` command, for example `whisper audio.mp3 --model medium`. The open-source model runs locally, so no OpenAI API key is needed.

Fine-Tune Whisper For Multilingual ASR with Transformers

Recently, OpenAI proposed Whisper ASR, a general-purpose speech recognition model that has generated great expectations. We tested Whisper and Google in a first-ever production head-to-head, and Whisper proved better in multiple categories. Side note: achieving transcription accuracy is one thing, but doing it in real time is another. This script modifies methods of Whisper’s model to gain access to the predicted timestamp tokens of each word without needing additional inference. Mastering OpenAI Whisper v3 for Cutting-Edge Speech Recognition.

Following the market, it is clear that there is a significant focus on voicebots. (For example, we benchmarked OpenAI’s Whisper against our internal test sets and it performed 10–15% worse in terms of WER.) You can use this information to customize speech recognition for any industry, including finance. Understanding these speaker characteristics is pivotal in shaping ASR system performance, reducing bias, and promoting consistent and equitable human-machine interaction.

Breaking Down Word Error Rate: An ASR Accuracy Optimization

Deepgram Whisper Large makes about 7.4% fewer word errors [3] than OpenAI’s Whisper Large API, based on our benchmarks. Trained on 680k hours of labelled data, Whisper models demonstrate a strong ability to generalise to many datasets and domains without the need for fine-tuning.
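Claims of "fewer word errors" than another system are usually relative comparisons between two WER values, not differences in percentage points. The sketch below shows that arithmetic. The function name `relative_wer_reduction` and the two WER values are hypothetical, chosen only for illustration, and are not taken from any benchmark cited here.

```python
def relative_wer_reduction(baseline_wer: float, candidate_wer: float) -> float:
    """Fraction by which the candidate's WER is lower than the baseline's.

    Positive values mean the candidate makes fewer word errors;
    negative values mean it makes more.
    """
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - candidate_wer) / baseline_wer


if __name__ == "__main__":
    # Hypothetical numbers: baseline WER of 10.00%, candidate WER of 9.26%.
    reduction = relative_wer_reduction(0.100, 0.0926)
    print(f"{reduction:.1%}")  # 7.4%
```

Note that a 7.4% relative reduction from a 10% baseline is only 0.74 percentage points of absolute WER, so it is worth checking which of the two a benchmark reports.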

openai/whisper-base · Hugging Face

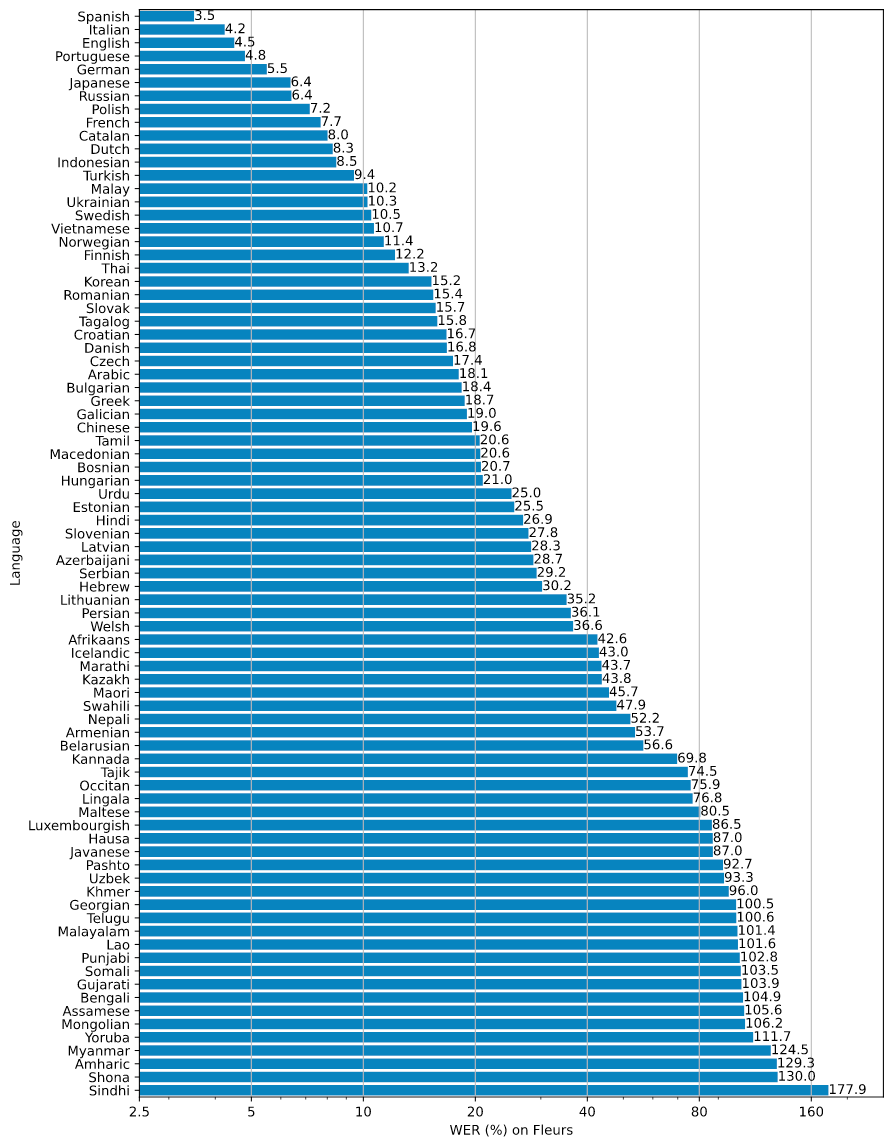

OpenAI’s Whisper models [9] are applied to Chinese dialect automatic speech recognition (ASR); they use the Transformer encoder-decoder structure [11] and can be regarded as LLMs grounded on speech inputs. What is Whisper? The news was big when OpenAI open-sourced a multilingual automatic speech recognition (ASR) model that was trained on 680,000 hours of annotated speech data, of which 117,000 hours are not in English. The table shows English transcription WER of the different models for different datasets, using beam search with temperature fallback. Analyzing OpenAI’s Whisper ASR Accuracy: Word Error Rates Across Languages and Model Sizes | Speechly

Robust Speech Recognition via Large-Scale Weak Supervision

Curious about the performance of the OpenAI Whisper automatic speech recognition system in the wild? Our latest blog post has you covered. Introduction: Progress in speech recognition has been energized by the development of unsupervised pre-training techniques exemplified by Wav2Vec 2.0 (Baevski et al., 2020). I use OpenAI’s Whisper Python library for speech recognition. How can I give it some hint phrases, as can be done with some other ASR systems such as Google’s? This information can be found in Table 9 of the Whisper paper. OpenAI’s Whisper can achieve human-level robustness and accuracy in ASR with only an off-the-shelf Transformer trained on 680,000 hours of weakly supervised, multilingual audio data.

Artificial Analysis’ independent evaluation is based on Common Voice v16.1, Mozilla’s leading open-source speech-to-text dataset. OpenAI’s Whisper is an end-to-end ASR model that drew considerable attention right after its release. Even without any fine-tuning it shows a considerable level of accuracy, and it supports a variety of features such as real-time translation, speaker labeling, and timeline display, so it is actively used especially for producing subtitles for foreign videos. We’ve trained and are open-sourcing a neural net called Whisper that approaches human-level robustness and accuracy on English speech recognition. Whisper is a pre-trained model for automatic speech recognition (ASR) and speech translation.

openai/whisper-large-v2 · Hugging Face

Summary analysis. Whisper is a general-purpose speech recognition model. We are releasing models and inference code to serve as a foundation for further work on robust speech processing. This article highlights some of the key strengths and limitations of using Whisper, whether through OpenAI’s APIs, Voicegain APIs or hosting it on your own.

How to Use OpenAI Whisper for Speech-to-Text Transcription

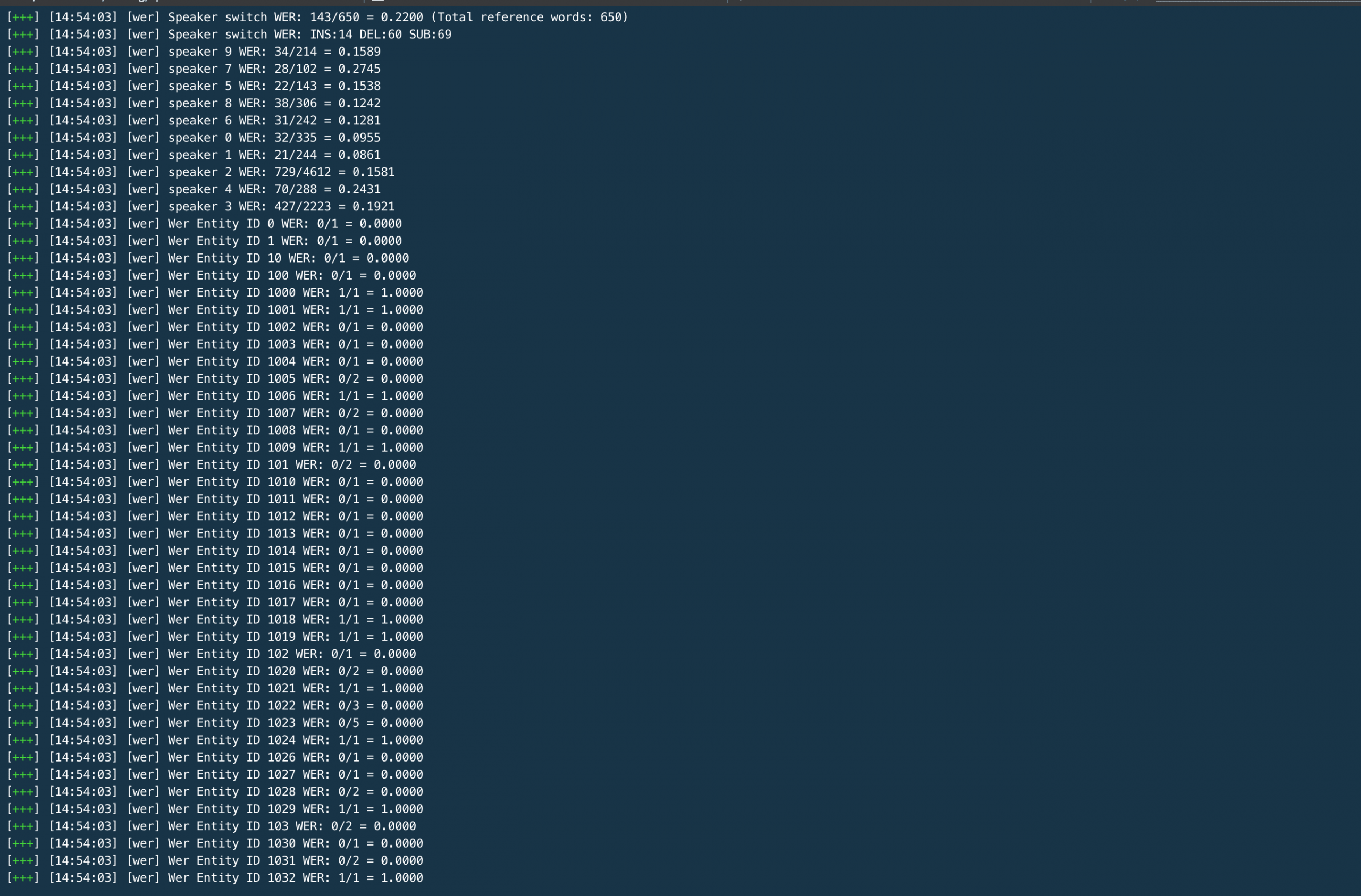

We propose a general framework to compute the word error rate (WER) of ASR systems that process recordings containing multiple speakers at their input. WER is a relatively simple but actionable metric that allows us to compare ASR systems.
