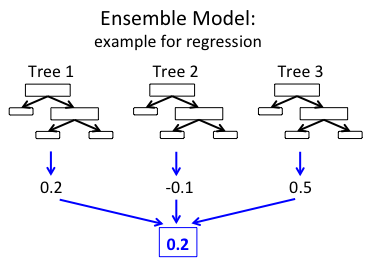

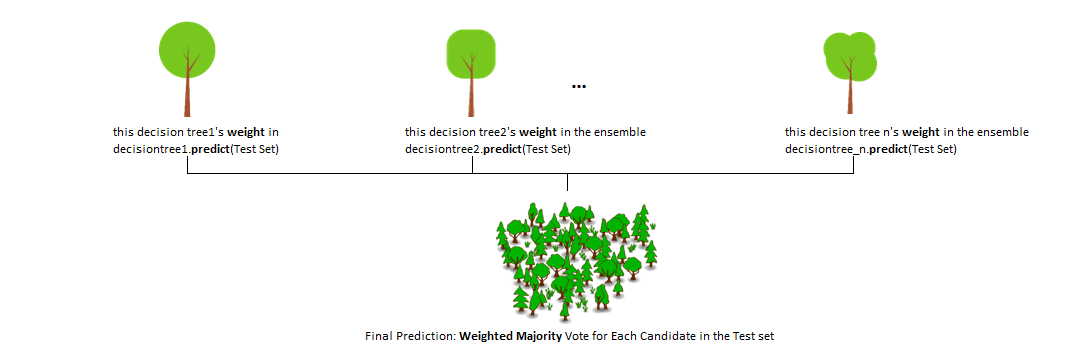

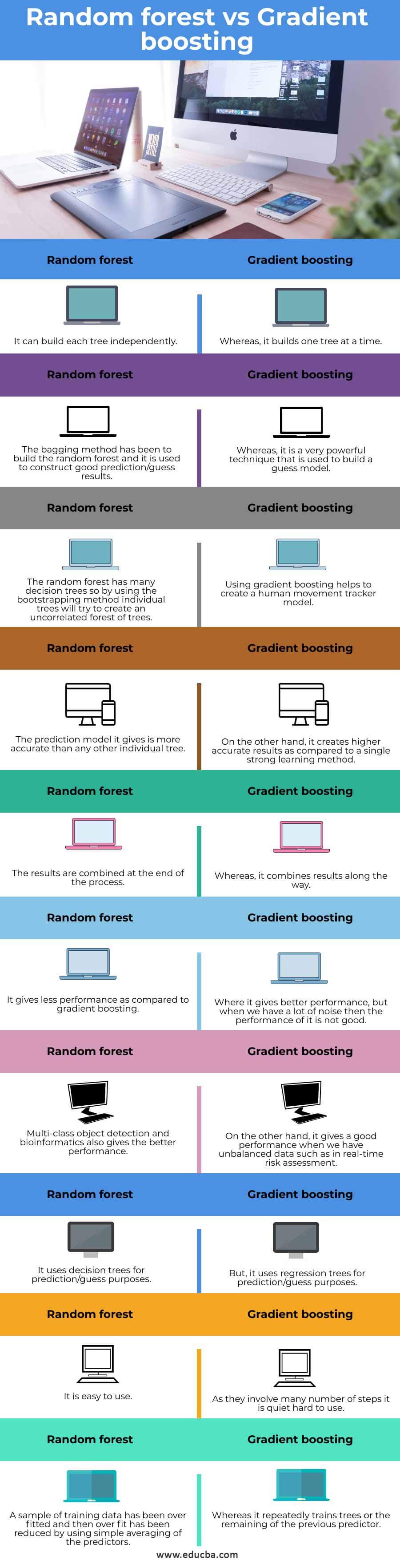

Random Forest is less likely to overfit than a single decision tree because it averages multiple trees to give a final prediction, which generally leads to better generalization. Averaging mostly reduces variance. For classification, the most common predicted class is selected as the final prediction. Subsampling the training data, the idea behind bagging, can also be used to reduce the correlation between the trees in the sequence in gradient boosting models.

Gradient boosting is a machine learning method that combines several so-called "weak learners" into one powerful model for tasks such as classification. It is suitable for complex problems with high-dimensional data, while random forest is more robust and less prone to overfitting. Compared to random forests, gradient boosted trees have a lot of model capacity, so they can model very complex decision boundaries. One problem that we may encounter in gradient boosting decision trees but not in random forests is overfitting due to the addition of too many trees. In addition, Chen & Guestrin introduce shrinkage. LightGBM is a boosting technique and framework developed by Microsoft.

Each method has characteristics that make it more suitable depending on the use case. If in doubt or under time pressure, use ensemble tree algorithms such as gradient boosting and random forest on your dataset. My best predictive model (with an accuracy of 80%) was an ensemble that included generalized linear models and gradient boosting.
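The bagging idea described above (fit each tree on a bootstrap sample, then take the most common predicted class) can be sketched in plain Python. This is a minimal illustration, not a real random forest: the "trees" are one-split stumps on a single feature, and the names `fit_stump`, `fit_bagged_stumps` and `predict_majority` are hypothetical helpers invented for this sketch.

```python
import random
from collections import Counter


def fit_stump(rows):
    """Fit a one-split classifier on (x, label) pairs with a scalar feature x."""
    best = None
    for threshold in sorted({x for x, _ in rows}):
        left = [y for x, y in rows if x <= threshold]
        right = [y for x, y in rows if x > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        # If nothing falls to the right, reuse the left label.
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_label, right_label = stump
    return left_label if x <= threshold else right_label


def fit_bagged_stumps(rows, n_trees, seed=0):
    """Fit each stump on its own bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    return [fit_stump([rng.choice(rows) for _ in rows]) for _ in range(n_trees)]


def predict_majority(stumps, x):
    """Return the class predicted by the largest number of stumps."""
    votes = Counter(predict_stump(stump, x) for stump in stumps)
    return votes.most_common(1)[0][0]


# Toy data (an assumption for illustration): class 0 below 5, class 1 from 5 upward.
rows = [(float(x), 0 if x < 5 else 1) for x in range(10)]
ensemble = fit_bagged_stumps(rows, n_trees=25)
print(predict_majority(ensemble, 2.0), predict_majority(ensemble, 8.0))
```

Individual stumps can differ because each one sees a different bootstrap sample; the vote smooths those differences out, which is the variance reduction the text refers to.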

3 Key Differences Between Random Forests and GBDT


Gradient Boosting Tree vs Random Forest


What is Gradient Boosting?

Gradient Boosting Machine uses an ensemble method called boosting. Because each new learner is fitted using the gradient of the loss, the method is called gradient boosting. It is often unclear what principle lies behind these algorithms and how they differ. With a random forest, the accuracy of the model stops improving after a certain number of trees, but overfitting does not become a problem.

The differences between decision trees, Random Forest and Gradient Boosting


Comparing Random Forest and Gradient Boosting

In random forests, adding too many trees won't cause overfitting.

Introduction to gradient boosting on decision trees with Catboost

Gradient Boosting Trees (GBT) and Random Forests are both popular ensemble learning techniques used in machine learning for classification and regression. Anyone working in data science quickly comes across terms such as decision tree, random forest and the various boosting algorithms (AdaBoost, LightGBM, XGBoost). Today I am trying to explain the difference between two ensemble models: random forest, a particular case of bagging, and gradient boosting. You might be familiar with gradient boosting libraries such as XGBoost, H2O or LightGBM, but in this tutorial I'm going to give a quick overview of the basics of gradient boosting and then gradually move to more complex things.

The decision tree is a simple and flexible algorithm, so simple that it can underfit the data. An underfit decision tree has low depth, meaning it splits the dataset only a few times in an attempt to separate the data. Tree-based algorithms are effective on everyday data science problems, and their extended variants are what make them so popular today.

Random forests generate a large number of decision trees, which are combined (using averages or "majority rules") at the end of the process. Another short definition: a random forest is a meta estimator that fits a number of decision tree classifiers on various sub-samples of the dataset and uses averaging to improve the predictive accuracy and control over-fitting. Random Forest generates many trees, each with leaves of equal weight within the model, in order to obtain higher accuracy. Bagging provides a good representation of the true population and so is most often used with models that have high variance (such as tree-based models). Both tree ensembles generally decrease variance. Despite the sharp predictions of gradient boosting algorithms, in some cases Random Forest benefits from the model stability of the bagging methodology.

Like random forests, gradient boosting is a set of decision trees, and gradient boosting models often use significantly more trees than random forests. Boosting was introduced in the seminal paper of Schapire (1990). Weak learners are defined as having better performance than random chance. Gradient boosting algorithms tackle one of the biggest problems in machine learning: bias. Boosting aims to improve overall predictive performance by optimizing the model's weights based on the errors of previous iterations, gradually reducing prediction errors. A competitive alternative to random forests are Histogram-Based Gradient Boosting (HGBT) models. The two differ in how they build trees: random forests typically rely on deep trees (that overfit individually).

One cited study used, in addition to simple linear regression, five machine learning (ML)-based algorithms, including random forest (RF) and K-nearest neighbors. The analysis demonstrates the strength of state-of-the-art, tree-based ensemble algorithms, while also showing the problem-dependent nature of ML algorithm performance.

Battle of the Ensemble — Random Forest vs Gradient Boosting


XGBoost vs LightGBM: How Are They Different

On the other hand, gradient boosting introduces leaf weighting to penalize leaves that do not improve the model's predictive power. The two main differences are how the trees are built and how their results are aggregated.

A big insight into bagging ensembles and random forests was allowing trees to be greedily created from subsamples of the training dataset. Decision trees come in two types, regression trees and classification trees. At any step m, gradient boosted trees produce a model that is the ensemble from the previous step plus one newly fitted tree.
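The equation for the ensemble at step m did not survive in the text. A common way to write it is sketched below; the symbols ($F_m$ for the ensemble, $h_m$ for the tree added at step $m$, $\eta$ for the learning rate, $L$ for the loss, $r_{im}$ for the pseudo-residuals) are notation assumed here, not taken from the original.

```latex
F_m(x) = F_{m-1}(x) + \eta \, h_m(x),
\qquad
r_{im} = -\left[ \frac{\partial L\bigl(y_i, F(x_i)\bigr)}{\partial F(x_i)} \right]_{F = F_{m-1}}
```

Here the tree $h_m$ is fitted to the pseudo-residuals $r_{im}$. For the squared-error loss $L = \tfrac{1}{2}(y - F)^2$ these reduce to the ordinary residuals $y_i - F_{m-1}(x_i)$.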

Gradient Boosting vs Random forest

Bagging, Boosting, and Gradient Boosting

In this post, we compare these two techniques and discuss how they are similar. Gradient boosting gives a prediction model in the form of an ensemble of weak prediction models, i.e., models that make very few assumptions about the data, which are typically simple decision trees. Gradient boosting machines also combine decision trees. LightGBM is unique in that it can construct trees using Gradient-based One-Side Sampling, or GOSS for short.
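The sampling rule behind GOSS can be sketched as follows: keep the instances with the largest absolute gradients, draw a random sample from the rest, and up-weight that sample so the overall gradient statistics stay roughly unbiased. This is a plain-Python illustration of the idea only, not LightGBM's implementation; `goss_sample`, `top_rate` and `other_rate` are names chosen for this sketch.

```python
import random


def goss_sample(gradients, top_rate=0.2, other_rate=0.1, seed=0):
    """Return (indices, weights) selected by a GOSS-style rule."""
    rng = random.Random(seed)
    n = len(gradients)
    # Rank instances by absolute gradient, largest first.
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    n_top = round(top_rate * n)
    n_other = round(other_rate * n)
    top = order[:n_top]                              # always kept
    sampled = rng.sample(order[n_top:], n_other)     # random subset of the rest
    # Small-gradient instances are up-weighted to compensate for the sampling.
    amplify = (1.0 - top_rate) / other_rate
    indices = top + sampled
    weights = [1.0] * n_top + [amplify] * n_other
    return indices, weights


# 100 hypothetical gradient values, for illustration only.
gradients = [0.05 * i for i in range(-50, 50)]
indices, weights = goss_sample(gradients)
print(len(indices), weights[0], weights[-1])
```

With these settings only 30 of the 100 instances are used to fit the next tree, and every large-gradient instance is among them.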

Random Forest vs Gradient Boosted Trees: A Comparison

GOSS looks at the gradients of the training instances when choosing which ones to sample. Random Forest aggregates the predictions from each tree and uses the typical prediction (the average or the majority vote) as the final prediction.

Also, I am happy to share that my recent submission to the Titanic Kaggle Competition scored within the top 20 percent.

A common misunderstanding is that Random Forest is a boosting algorithm that uses trees as its weak classifiers; it is a bagging method. Here, random forest and gradient boosting are approaches built on top of decision trees rather than core decision tree algorithms themselves. In contrast, a shallow tree does not vary much but can have large bias because it may not be complex enough. Random Forest reduces the correlation between trees by forcing each split to consider only a random subset of the predictors. In this post, I am going to compare two popular ensemble methods, Random Forests (RF) and Gradient Boosting Machine (GBM). For an end-to-end walkthrough of a gradient boosting model, see the tutorial on training Boosted Trees models in TensorFlow. Compared to more complex algorithms such as (deep) neural networks, random forests and gradient boosting are easy to implement.

Difference Between Random Forest and XGBoost

The popular bagging algorithm, random forest, also sub-samples a fraction of the features when fitting a decision tree to each bootstrap sample, thus further reducing the correlation between trees. This feature sub-sampling is the main difference between bagging and random forests. Random forests use bagging, which is model averaging. Although bagging is the oldest ensemble method, Random Forest is known as the more popular candidate because it balances simplicity of concept (it is simpler than boosting and stacking) and performance (it performs better than plain bagging). Both algorithms are widely used in machine learning.

Before talking about gradient boosting I will start with decision trees. Training starts with one model, which could be a very simple one-node tree. XGBoost implements the additive model known as gradient boosting. Boosting on random subsamples of the training data is called stochastic gradient boosting. The LightGBM framework implements the LightGBM algorithm and is available in Python, R, and C.

Note: TensorFlow Decision Forests provides modern Keras-based implementations of many state-of-the-art decision forest algorithms.

Gradient boosting

Several studies compare these methods. One compares the performance of gradient boosting decision tree (GBDT), artificial neural network (ANN), and random forest (RF) methods in LUC. Another uses seven machine learning techniques, including linear regression, random forest (RF), decision trees, gradient boosting, K-nearest neighbors, and a Nu support vector model. A third develops a statistical arbitrage strategy based on deep neural networks, gradient-boosted trees, random forests, and different ensembles of them, deployed on the S&P 500 constituents from December 1992 until October 2015.

Because of their good results, Random Forest and Gradient Boosting have become the reference algorithms for tabular data, which is why multiple implementations have been developed. A decision tree is a simple decision-making diagram. Gradient Boosted Trees is another ensemble learning method that combines multiple decision trees. In general, random forests are faster to train and easier to tune than gradient boosting models, but gradient boosting models can often achieve better predictive performance. A key difference lies in the training process: gradient boosting trains weak learners sequentially, while random forests train their trees independently.

In boosting, decision trees are trained sequentially in order to gradually improve the predictive power of the group. Chen & Guestrin add shrinkage (i.e. a learning rate) and column subsampling (randomly selecting a subset of features) to this gradient tree boosting algorithm, which further reduces overfitting.
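The sequential training with shrinkage described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not how any particular library does it: the loss is squared error (so the residuals are the negative gradients), each weak learner is a one-split regression stump on a single feature, and all function names are hypothetical.

```python
def fit_stump(xs, targets):
    """Return (threshold, left_mean, right_mean) minimising squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # the largest x would leave the right side empty
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((t - left_mean) ** 2 for t in left) + sum((t - right_mean) ** 2 for t in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def stump_value(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def fit_boosted_stumps(xs, ys, n_trees, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = sum(ys) / len(ys)
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Shrinkage: only a fraction of each new tree's output is added.
        predictions = [p + learning_rate * stump_value(stump, x) for x, p in zip(xs, predictions)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_value(stump, x) for stump in stumps)


def training_mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


# Toy regression data (an assumption for illustration): y = x^2 on [0, 4).
xs = [i / 10 for i in range(40)]
ys = [x ** 2 for x in xs]
errors = {n: training_mse(fit_boosted_stumps(xs, ys, n), xs, ys) for n in (0, 5, 50)}
print(errors)
```

The training error keeps falling as trees are added, which is also why a boosted model can overfit if the number of trees is not controlled, for example by monitoring the error on held-out data.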

Gradient Boosting

The main difference between random forests and gradient boosting lies in how the decision trees are created and aggregated. Random forest and gradient boosted decision trees (GBDT) are among the most commonly used machine learning algorithms. Random forests are good at reducing the variance of deep trees, but they are not so effective on shallow ones.

Gradient Boosting refers to a methodology in machine learning where an ensemble of weak learners is used to improve model performance in terms of efficiency and accuracy.

Gradient Boosting vs Random Forest

Gradient Boosting: A Step-by-Step Guide

Gradient boosting is a machine learning ensemble technique that combines the predictions of multiple weak learners, typically decision trees, sequentially. It is based on boosting in a functional space, where the target is pseudo-residuals rather than the typical residuals used in traditional boosting. Like bagging and boosting, gradient boosting is a methodology applied on top of another machine learning algorithm.

If it is set to 0, then there is no difference between the prediction results of gradient boosted trees and XGBoost. Contrary to random forest, the default values of the hyper-parameters that control tree growth in gradient boosting are set to harshly limit the expressive power of the trees.

In this example we compare the performance of Random Forest (RF) and Histogram Gradient Boosting (HGBT) models in terms of score and computation time for a regression dataset, though all the concepts presented here apply to classification as well.
