These numbers are a little … If a single delayed response is detected, this might indicate nothing more than a busy OSD. When a Red Hat Ceph Storage cluster is up and running, you can add OSDs to the storage cluster at runtime. It is handy to find out how mixed the cluster is.

On the read side, Ceph is delivering around 7500 IOPS per core used and anywhere from 2400 to 8500 IOPS per core allocated, depending on how many cores are assigned to OSDs. Ceph OSDs send heartbeat ping messages to each other in order to monitor daemon availability and network performance. These images are tagged in a few ways: the most explicit form of tags are full-ceph-version-and-build tags (e.g. …). Tuning the Ceph configuration for an all-flash cluster resulted in material performance improvements compared to the default (out-of-the-box) configuration.

The Ceph Storage Cluster is the foundation for all Ceph deployments. For example, my first post about cephadm dealt with the options to change a monitor’s IP address. “ceph osd crush reweight” sets the CRUSH weight of the OSD. CRUSH uses a map of the cluster (the CRUSH map) to … Verify that Monitors have a quorum by using the ceph health command. List the versions that each OSD in a Ceph cluster is running.

Architecture — Ceph Documentation

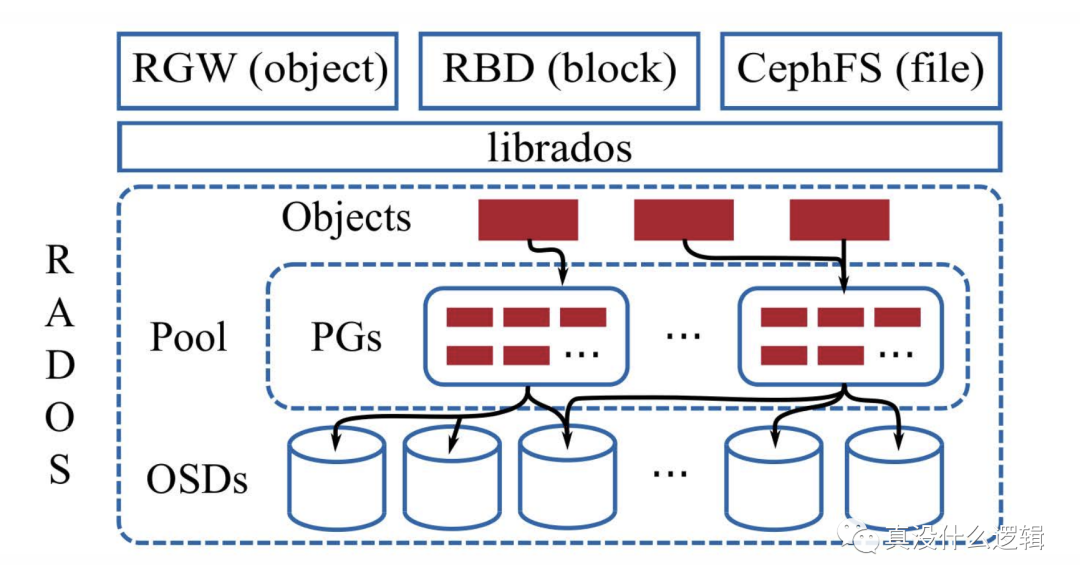

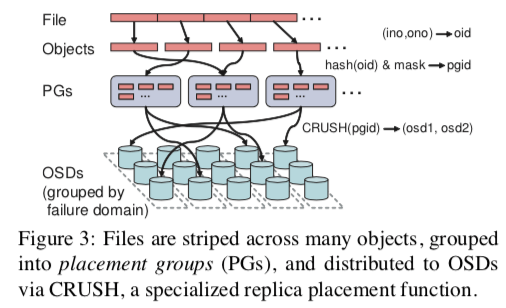

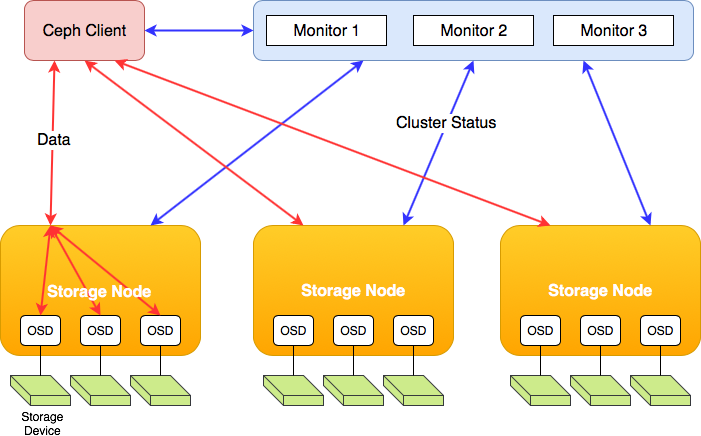

If your host has multiple storage drives, you may need to remove one ceph-osd daemon for each drive. Bootstrapping the initial monitor(s) is the first step in deploying a Ceph Storage Cluster. crush-compat mode is backward compatible with older clients. As Ceph handles data object redundancy and multiple parallel writes to disks (OSDs) on its own, using a RAID controller normally doesn’t improve performance or availability. Ceph stores data as objects within logical storage pools. Once all OSDs are up, the dashboard gives us their activity. For Filestore-backed clusters, the argument of the --osd-data datapath option (which is datapath in this example) should be a directory on an XFS file system where … Ceph is self-repairing. A minimal system has at least one Ceph Monitor and two … In crush-compat mode, the balancer automatically makes small changes to the data distribution in order to ensure that OSDs are utilized equally.

Distributed storage systems compared: Ceph vs MinIO. Object storage overview: object storage, often referred to as object-based storage, is a data storage architecture that can handle large amounts of unstructured data and is used in many systems.

The improvement in 99% tail latency is dramatic: in some cases, running 2 OSDs per NVMe reduces 99% latency to half. BlueStore with a 2 OSDs/NVMe configuration delivers the following advantages compared to 4 OSDs/NVMe: more total memory and vCPUs available per OSD, increasing the resiliency and stability of the Ceph cluster.

Manual Deployment. Using the CRUSH algorithm, Ceph calculates which placement group (PG) should contain the object, and which OSD …
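The two-step placement idea mentioned above (object to placement group, then placement group to OSDs) can be illustrated with a deliberately simplified sketch. This is not Ceph's implementation: real Ceph uses its own hash function and the hierarchical CRUSH map, whereas this sketch swaps in SHA-256 and plain rendezvous (highest-random-weight) hashing. The function names `object_to_pg` and `pg_to_osds` are hypothetical and exist only for illustration.

```python
import hashlib


def object_to_pg(object_name: str, pg_num: int) -> int:
    """Map an object name to a placement group index in [0, pg_num).

    Assumption: SHA-256 stands in for Ceph's real object hash.
    """
    if pg_num <= 0:
        raise ValueError("pg_num must be positive")
    digest = hashlib.sha256(object_name.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % pg_num


def pg_to_osds(pg_id: int, osd_ids: list[int], replicas: int) -> list[int]:
    """Pick `replicas` distinct OSDs for a PG using rendezvous hashing.

    Assumption: a flat list of equally weighted OSDs, unlike a real CRUSH map.
    """
    if replicas > len(osd_ids):
        raise ValueError("not enough OSDs for the requested replica count")

    def score(osd_id: int) -> int:
        # Every (PG, OSD) pair gets a pseudo-random score; highest scores win.
        digest = hashlib.sha256(f"{pg_id}:{osd_id}".encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    return sorted(osd_ids, key=score, reverse=True)[:replicas]


if __name__ == "__main__":
    pg = object_to_pg("my-object", pg_num=128)
    print(pg, pg_to_osds(pg, osd_ids=list(range(12)), replicas=3))
```

The point of the sketch is that any client holding the same inputs computes the same placement without asking a central lookup service, which is the property the text attributes to CRUSH.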

Drives: each of these nodes needs at least 4 storage drives (OSDs).

Reviewing Cluster Health. For now, we will skip the 3rd step of the wizard to add additional services. During the Octopus and Pacific development cycles that started changing.

Introduction: in the current era of booming cloud computing, interest in storage systems is steadily growing. When Ceph services start, the initialization process activates a set of daemons that run in the background. Monitoring OSDs and PGs.

The IO benchmark is done with fio, using the configuration: fio -ioengine=libaio -bs=4k -direct=1 -thread -rw=randread -size=100G -filename=/data/testfile -name="CEPH Test" -iodepth=8 -runtime=30.

ceph-osd is the object storage daemon for the Ceph distributed file system. OSD: an OSD (Object Storage Daemon) is a process responsible for storing data on a drive that belongs to that OSD. With so many tools and storage systems available, choosing among them has become a genuinely confusing question.

I saw in the official docs here (bottom of the page) that it recommends using separate physical disk devices for the OS drive vs Ceph OSD volumes. If a neighboring Ceph OSD Daemon doesn’t show a heartbeat within a 20 second grace period, the Ceph OSD Daemon may consider the neighboring Ceph OSD Daemon down and report it back to a …
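The grace-period rule described above can be sketched as a small bookkeeping structure. This is a minimal illustration, not Ceph's heartbeat code: the class name `HeartbeatTracker` and its methods are hypothetical, and only the 20 second grace period comes from the text.

```python
from dataclasses import dataclass, field

GRACE_SECONDS = 20.0  # grace period quoted in the text


@dataclass
class HeartbeatTracker:
    """Remember when each peer OSD was last heard from (hypothetical helper)."""

    grace: float = GRACE_SECONDS
    last_seen: dict[int, float] = field(default_factory=dict)

    def record_ping(self, osd_id: int, now: float) -> None:
        """Store the timestamp of the latest heartbeat reply from a peer."""
        self.last_seen[osd_id] = now

    def suspected_down(self, now: float) -> list[int]:
        """Return peers whose last heartbeat is older than the grace period."""
        return sorted(
            osd_id
            for osd_id, seen in self.last_seen.items()
            if now - seen > self.grace
        )


if __name__ == "__main__":
    tracker = HeartbeatTracker()
    tracker.record_ping(1, now=0.0)
    tracker.record_ping(2, now=15.0)
    print(tracker.suspected_down(now=25.0))
```

In this sketch a peer is only a candidate for being reported; the decision to actually mark an OSD down is left out on purpose, because the source text is truncated at that point.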

2hr hybrid workload: another OSD node (24 OSDs) stopped; 2hr hybrid workload: missing OSDs started; monitor recovery until all PGs are active+clean. Test Environment: the following points show the hardware, software, and tools that were used in our testing scenarios. Ceph OSDs (Object Storage Devices): the background services for the actual file management; they are responsible for storing, duplicating, and restoring data …

Ceph all-flash/NVMe performance: benchmark and optimization

Ceph Quincy v17.1 has a potentially breaking regression with CephNFS.

OpenShift Container Storage 4: Introduction to Ceph

The result is up to 134% higher IOPS, ~70% lower average latency and ~90% lower tail latency on an all-flash cluster. This article introduces common storage systems, in the hope of clearing up this confusion. While not shown here, the improvement for 99. … OSD drives much smaller than one terabyte use a significant … On the contrary, Ceph is designed to handle whole disks on its own, without any abstraction in between. As a rewritten version of the Classic OSD, the Crimson OSD is compatible with the existing RADOS protocol from the …

Crimson: Next-generation Ceph OSD for Multi-core Scalability

Ceph cluster: a cluster therefore consists of at least 3 nodes with 4 drives each … All Ceph clusters require at least one monitor, and at least as many OSDs as copies of an object stored on the cluster. However, when problems persist, monitoring …
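The minimum-size rule stated above (at least one monitor, and at least as many OSDs as object copies) reduces to a one-line check. The function name `meets_minimum` is hypothetical, and the check says nothing about whether such a cluster is advisable in production.

```python
def meets_minimum(monitors: int, osds: int, copies: int) -> bool:
    """Return True if the cluster satisfies the minimum stated in the text:
    at least one monitor and at least as many OSDs as copies of an object."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return monitors >= 1 and osds >= copies


if __name__ == "__main__":
    # The 3-node, 4-drives-per-node layout from the text, with 3 copies assumed.
    print(meets_minimum(monitors=3, osds=12, copies=3))
```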

A Ceph OSD generally consists of one ceph-osd … (This mask has no effect on non-OSD daemons or clients.) Monitors maintain maps of … Ceph has no single point of …

Adding/Removing OSDs — Ceph Documentation

Ceph Storage Best Practices for Ultimate Performance in Proxmox VE

OSD creation should have the inventory and analyse it to determine whether the OSD creation can be hybrid or dedicated, then present those as options to the user (they never see …). Despite restricting OSDs to only using a certain number of CPU threads via numactl, the 2 OSD per NVMe configuration consistently used more CPU than the 1 … In this post, we will look at Ceph storage best practices for Ceph storage clusters and look at insights …

Configuring Ceph — Ceph Documentation

How does Ceph do writes onto the OSDs?

Alternatively, if your host machine has multiple storage drives, you might need .

Distributed Storage Systems Compared: Ceph vs MinIO

In a storage cluster of three nodes, with 12 OSDs, adding a node adds 4 OSDs and increases capacity by 33%. Together with BlueStore, numerous other performance improvements in core Ceph helped reduce OSD CPU consumption, especially for flash media OSDs. We recommend a minimum drive size of 1 terabyte. Ceph clients and Ceph OSDs both use the CRUSH (Controlled Replication Under Scalable Hashing) algorithm. Running multiple OSDs per NVMe consistently reduces tail latency in almost all cases. How does Ceph do writes onto the OSDs? (Solution verified; updated 2018-02-09.) CRUSH allows Ceph clients to communicate with OSDs directly rather than through a centralized server or broker. Do you know the reasoning behind …
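The capacity figure quoted above (12 OSDs, plus 4 from a new node, gives 33%) can be checked with a short calculation. The sketch assumes all OSDs are the same size, which the text does not state, and the function names are hypothetical. The second function gives the share of raw capacity that ends up on the new node, which is a rough idealized indicator of how much data a rebalance would move there.

```python
def capacity_increase_pct(current_osds: int, added_osds: int) -> float:
    """Percentage growth in raw capacity, assuming equally sized OSDs."""
    if current_osds <= 0 or added_osds < 0:
        raise ValueError("current_osds must be > 0 and added_osds >= 0")
    return 100.0 * added_osds / current_osds


def new_node_share(current_osds: int, added_osds: int) -> float:
    """Fraction of total raw capacity held by the newly added OSDs."""
    if current_osds <= 0 or added_osds < 0:
        raise ValueError("current_osds must be > 0 and added_osds >= 0")
    return added_osds / (current_osds + added_osds)


if __name__ == "__main__":
    print(f"{capacity_increase_pct(12, 4):.1f}% more capacity")
    print(f"{new_node_share(12, 4):.0%} of capacity on the new node")
```

Under the equal-size assumption, the new node holds a quarter of the cluster's capacity even though capacity grew by a third, which is why the two numbers should not be confused when estimating rebalancing work.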

What Is Ceph? Introduction and How It Works

Crimson vs Classic OSD Architecture. OSDs require substantial storage drive space for RADOS data. On the write side, Ceph is delivering around 3500 IOPS per core used and anywhere from 1600 to 3900 IOPS per core allocated. Ceph Images: official Ceph container images can be found on Quay. … meta: erasure-coded distributed storage, large-file data stored separately from metadata, no centralized metadata service …

We use the following terms in this article: Nodes: the minimum number of nodes for running Ceph is 3. Benchmark result screenshot. High availability and high reliability require a fault-tolerant approach to managing hardware and software issues. One OSD is typically deployed for each local block device present on the node, and the natively scalable nature of Ceph allows for thousands of OSDs to be part …

Intro to Ceph — Ceph Documentation

This post will briefly describe how you can reactivate OSDs … Each object is stored on an Object Storage Device (also called an “OSD”). … 5 1To OSDs (ssd) hosting their own OSD caches. But if multiple delays between distinct pairs of OSDs are detected, this might indicate a failed network switch, a NIC failure, or a layer 1 …

What’s the best practice of improving IOPS of a CEPH cluster?

Removing OSDs (Manual): When you want to reduce the size of a cluster or replace hardware, you may remove an OSD at runtime. Monitor deployment also sets important criteria for the entire … Troubleshooting Ceph OSDs.

Ceph: data is stored in filestore/bluestore, and metadata is stored in a KV database (with filestore, part of the metadata is stored in XFS extended attributes). MinIO: data is stored in a local Linux directory, and both small-file data and metadata are stored in the metadata file xl. …

RAID controllers are not designed for the Ceph workload … See the NFS documentation’s known issue for more detail. It is a great storage solution when integrated within Proxmox Virtual Environment (VE) clusters, providing reliable and scalable storage for virtual machines, containers, etc. The following sections provide details on how CRUSH enables Ceph … A Ceph Storage Cluster might contain thousands of storage nodes. Ceph is a scalable storage solution that is free and open-source. This weight is an arbitrary value (generally the size of the disk in TB or something) and controls how … Service Specifications of type osd are a way to describe a cluster layout, using the properties of disks.
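The remark above that a CRUSH weight is "generally the size of the disk" can be made concrete with a small sketch. Two assumptions are made here that the text does not confirm: the weight unit is taken to be one TiB, and the share of data an OSD receives is taken to be proportional to its weight in a flat, single-level layout. The function names are hypothetical.

```python
TIB = 2 ** 40  # assumed weight unit; the text only says "TB or something"


def crush_weight(size_bytes: int, unit_bytes: int = TIB) -> float:
    """Derive a weight from a drive's size: a 4 TiB drive gets weight 4.0."""
    if size_bytes < 0 or unit_bytes <= 0:
        raise ValueError("size must be >= 0 and unit must be > 0")
    return size_bytes / unit_bytes


def expected_shares(weights: dict[str, float]) -> dict[str, float]:
    """Idealized fraction of data per OSD, proportional to its weight."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("total weight must be positive")
    return {name: weight / total for name, weight in weights.items()}


if __name__ == "__main__":
    weights = {"osd.0": crush_weight(1 * TIB), "osd.1": crush_weight(3 * TIB)}
    print(expected_shares(weights))
```

Because the weight is relative, only the ratios matter in this sketch: doubling every weight leaves the expected shares unchanged.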

Ceph Performance Guide

There were obvious and significant performance advantages at the time when deploying multiple OSDs per flash device, especially when using NVMe drives.

Balancer Module — Ceph Documentation

Just follow the steps for monitoring your OSDs and placement groups, and then begin troubleshooting.When you add or remove Ceph OSD Daemons to a cluster, CRUSH will rebalance the cluster by moving placement groups to or from Ceph OSDs to restore balanced utilization. To maintain operational performance, .

This document is for a development version of Ceph. Verify your network connection. Additional modes include upmap-read and read. The basic use cases we have in this area are: 1. … See Troubleshooting networking issues for details. In the end, we have a healthy Ceph cluster running on Raspberry Pis. With Ceph, an OSD is generally one Ceph ceph-osd daemon for one storage drive within a host machine. In commands that specify a configuration option, the argument of the option (in the …). The balancer mode can be changed from upmap mode to crush-compat mode. The health status can also be checked through the Ceph CLI.

This guide compares Ceph, GlusterFS, MooseFS, HDFS, and DRBD in depth. Based upon RADOS, Ceph Storage Clusters consist of several types of daemons: a Ceph Monitor (MON) maintains a master copy of the cluster map. Retrieve device information. … 9% latency is … The cluster storage devices are the physical storage devices installed in each of the cluster’s hosts.

Back in the Ceph Nautilus era, we often recommended 2, or even 4 OSDs per flash drive. The benchmark was done on a separate machine, configured to connect to the cluster via 10GbE … There is one case though where we still see a significant advantage. It manages data on local storage with redundancy and provides access to that data over the network.

OSDs Check Heartbeats: each Ceph OSD Daemon checks the heartbeat of other Ceph OSD Daemons at random intervals less than every 6 seconds. Ceph Nodes, Ceph OSDs, Ceph Pool. A Ceph OSD is the part of a Ceph cluster responsible for providing object access over the network, maintaining redundancy and high availability, and persisting objects to a local storage device. Monitors know the location of all the data in the Ceph cluster. Managing the OSDs on the Ceph dashboard. The process of migrating placement groups and the objects they contain can reduce the cluster’s operational performance considerably.
How does Ceph … You can carry out the following actions on a Ceph OSD on the Red Hat Ceph Storage Dashboard: Create a new OSD. Ceph OSDs control read, write, and replication operations on storage drives. Everything was fine for months, but for the past two or three weeks, all VMs that use Ceph storage have experienced major performance … Lower latency for small random writes. Tests were run on two clusters, each with 8 OSDs and 4096 … We need to execute different operations over them and also to retrieve information about physical features and working behavior. Service specifications give the user an abstract way to tell Ceph … The Ceph Monitor is one of the daemons essential to the functioning of a Ceph cluster. Bigger BlueStore onode cache per OSD. In a production Ceph storage cluster, a … For example, class:ssd would limit the option only to OSDs backed by SSDs. Lower CPU usage per OSD.

Ceph performance degradation

By using an algorithmically-determined method of storing and retrieving data, Ceph avoids a single point of failure, a performance bottleneck, and a physical limit to its scalability. This chapter contains information on how to fix the most common errors related to Ceph OSDs. Typically, an OSD is a Ceph ceph-osd daemon running on one storage drive within a host machine.
